A course introducing customer support agents to Quality Assurance (QA) principles and practices. Designed to demystify QA processes, rubrics, and evaluation criteria, this interactive training empowers agents to understand how their interactions are assessed and apply QA insights to their day-to-day work.

- Responsibilities: Quiz Design, Gamification, Visual Design, Interaction Design, eLearning Development, Storyboarding

- Tools Used: Storyline 360, Adobe Photoshop, Rise, Miro

- Target Audience: Customer Support Agents

Project Overview

In collaboration with our QA team, I helped develop a structured training program that introduces agents to QA. The goal was to familiarize agents with the evaluation criteria used in ticket assessments, making QA approachable and helpful rather than intimidating. The course covered the key evaluation points, how agents can meet these expectations, and how to interpret QA feedback constructively.

The course featured multiple components:

- eLearning: An introductory module in Rise to cover QA fundamentals.

- Practice: Hands-on time in the QA tool, where agents view evaluations and feedback on real tickets.

- Virtual Class: An interactive Miro session, with group activities and discussions, designed to encourage engagement and reflection.

- Gamified Final Quiz: A Storyline quiz where agents test their knowledge and get feedback in real time.

Storyline Quiz Design

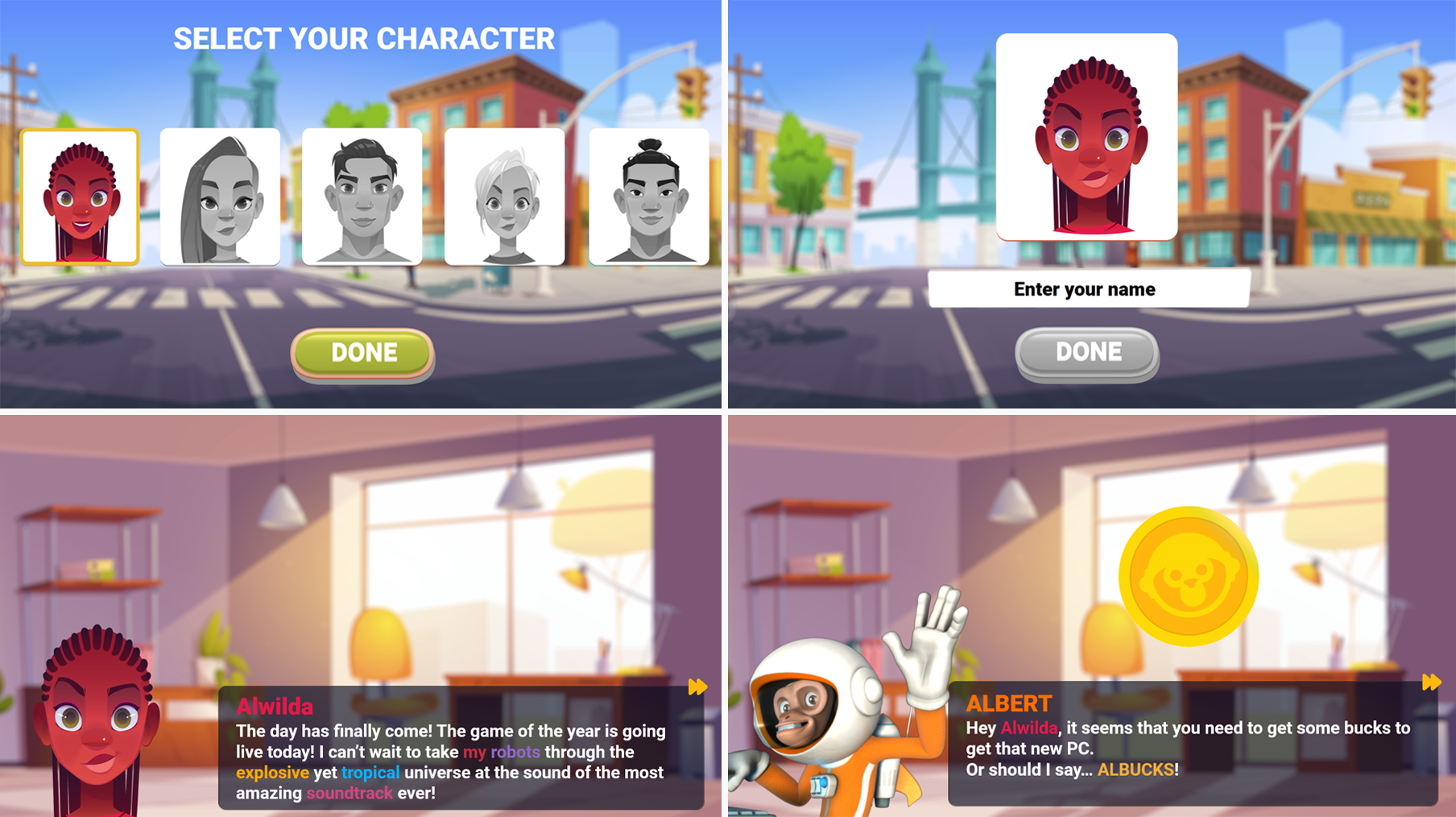

My primary task was designing the final quiz, using a conversational, gamified approach to put agents at ease. To create a supportive environment, I introduced Space Ape’s mascot, Albert, as the quiz guide. The quiz was framed as a “build-your-own-PC” challenge, a theme agents already knew from previous QA team interactions, so the narrative carried over seamlessly.

In the quiz, agents earn “Albucks” (Albert-themed currency) for correct answers and spend them to unlock custom PC parts. Agents personalize their final PC build by choosing components within their Albuck balance, so each correct answer opens up more customization options and reinforces the importance of accurate responses. After each answer, Albert offers supportive feedback, providing a clear explanation and encouraging reflection.
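The earn-and-spend loop described above can be sketched in a few lines of Python. This is only an illustration of the logic, not the actual build: the quiz itself was made in Storyline, and the reward amount, part names, and prices below are hypothetical values chosen for the example.

```python
from dataclasses import dataclass, field

# Hypothetical values: the real reward and part prices are not documented here.
REWARD_PER_CORRECT = 100
PART_PRICES = {"basic_gpu": 100, "rgb_case": 200, "premium_gpu": 300}


@dataclass
class Wallet:
    """Tracks an agent's Albuck balance and the PC parts they have unlocked."""
    albucks: int = 0
    parts: list = field(default_factory=list)

    def answer(self, correct: bool) -> None:
        # Only correct answers pay out; wrong answers leave the balance unchanged.
        if correct:
            self.albucks += REWARD_PER_CORRECT

    def affordable_parts(self) -> list:
        # Parts the agent can unlock with the current balance, in name order.
        return sorted(
            name for name, price in PART_PRICES.items() if price <= self.albucks
        )

    def buy(self, part: str) -> bool:
        # Refuse the purchase if the balance is too low.
        price = PART_PRICES[part]
        if price > self.albucks:
            return False
        self.albucks -= price
        self.parts.append(part)
        return True
```

With these assumed numbers, an agent who answers two of three questions correctly can afford the basic GPU or the RGB case but not the premium GPU, which is the kind of trade-off that tied each missed question to a visible consequence in the final build.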

Interactive Elements and Visuals

Since this was a general QA course for agents supporting multiple games, the visual design couldn’t be specific to any single game. This presented a creative challenge: to design a course that was engaging and cohesive without relying on the visual assets of a particular game.

To address this, I leaned into Space Ape’s brand mascot, Albert, as a unifying character, already familiar to agents across different game titles.

Agents began the quiz by choosing a character and setting a name, which persisted throughout the quiz to give it a “visual novel” feel. Albert would guide them through the questions, offering friendly feedback along the way.

To make the quiz feel dynamic, I used variables and states to track and display each agent’s choices, all on a single Storyline slide for easy editing. This allowed Albert’s feedback and the visual elements to adapt to the player’s choices, enhancing personalization.
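As a rough analogy for how variables and states work together, the Python sketch below keeps the agent's choices in one place and derives Albert's expression and feedback line from them. The variable names, state names, and wording are hypothetical; in Storyline the same idea is expressed with project variables and object states rather than code.

```python
from dataclasses import dataclass


@dataclass
class QuizVariables:
    """Stand-in for the Storyline variables that persist across the quiz."""
    player_name: str = "Agent"          # name the agent typed at the start
    character: str = "character_a"      # hypothetical ID of the chosen character
    last_answer_correct: bool = False   # updated after every question


def albert_state(v: QuizVariables) -> str:
    # Pick which visual state of Albert to show (state names are assumptions).
    return "happy" if v.last_answer_correct else "encouraging"


def albert_feedback(v: QuizVariables, explanation: str) -> str:
    # Personalize the feedback line with the stored player name.
    opener = "Nice work" if v.last_answer_correct else "Not quite"
    return f"{opener}, {v.player_name}! {explanation}"
```

Keeping every choice in a small set of variables is what makes a single-slide layout workable: the slide's objects only need to read those values to decide what to show.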

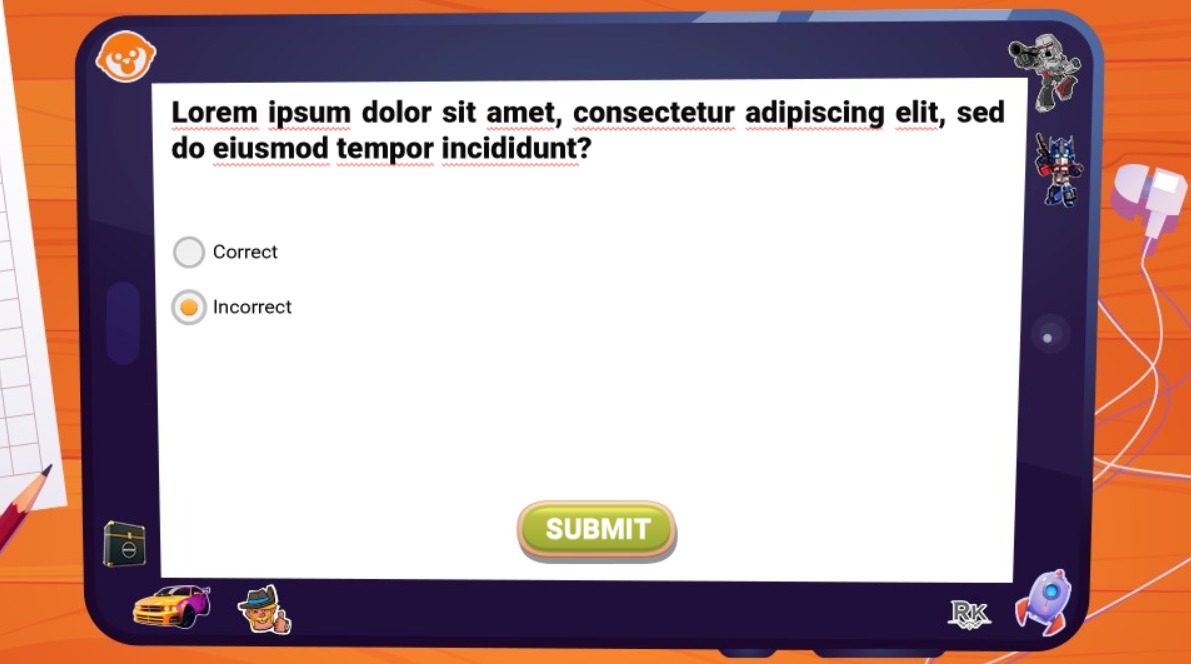

Without a dedicated artist to create custom assets for this new Space Ape universe, I sourced cohesive visuals from stock websites, giving the quiz a unified aesthetic. Albert’s tablet, where quiz questions appeared, featured stickers of chibi characters from each game title, symbolizing his “favorite characters” and building a connection to the Space Ape IPs. This subtle element reinforced Albert’s role as both a guide and a fellow fan of the Space Ape universe, enhancing agents’ sense of belonging to the brand.

Try the demo below (sound on for full experience):

Results and Takeaways

During the virtual class, agents eagerly shared their completed PC builds and discussed their choices, creating a collaborative and memorable experience. Agents remembered the specific questions they had missed, because each miss had cost them a particular part. This gave trainers a natural opening to clear up those doubts with the agents’ participation.

The combination of gamification and a familiar mascot provided a comfortable, interactive learning experience, helping agents see QA as an ally in their professional growth. Agents scored an average of 94% on the final quiz, which suggests that a themed, story-driven training approach was effective.